Preparation

Kernel tuning

cat >> /etc/sysctl.conf<<EOF

net.ipv4.ip_forward=1

net.bridge.bridge-nf-call-iptables=1

net.ipv4.neigh.default.gc_thresh1=4096

net.ipv4.neigh.default.gc_thresh2=6144

net.ipv4.neigh.default.gc_thresh3=8192

EOF

Enable IPVS

[root@kubernetesmaster ~]# uname -r

5.3.0-1.el7.elrepo.x86_64

[root@kubernetesmaster ~]# cat /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

module=(ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

ip_vs_lc

br_netfilter

nf_conntrack)

for kernel_module in "${module[@]}"; do

/sbin/modinfo -F filename "$kernel_module" &> /dev/null && echo "$kernel_module" >> /etc/modules-load.d/ipvs.conf || :

done

ipvs_modules_dir="/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"

for i in $(ls "$ipvs_modules_dir" | sed -r 's#(.*)\.ko.*#\1#'); do

/sbin/modinfo -F filename $i &> /dev/null

if [ $? -eq 0 ]; then

/sbin/modprobe $i

fi

done

[root@kubernetesmaster ~]# source /etc/sysconfig/modules/ipvs.modules

[root@kubernetesmaster modules]# lsmod | grep ip_vs

ip_vs_wrr 16384 0

ip_vs_wlc 16384 0

ip_vs_sh 16384 0

ip_vs_sed 16384 0

ip_vs_rr 16384 0

ip_vs_pe_sip 16384 0

nf_conntrack_sip 32768 1 ip_vs_pe_sip

ip_vs_ovf 16384 0

ip_vs_nq 16384 0

ip_vs_mh 16384 0

ip_vs_lc 16384 0

ip_vs_lblcr 16384 0

ip_vs_lblc 16384 0

ip_vs_ftp 16384 0

ip_vs_fo 16384 0

ip_vs_dh 16384 0

ip_vs 155648 30 ip_vs_wlc,ip_vs_rr,ip_vs_dh,ip_vs_lblcr,ip_vs_sh,ip_vs_ovf,ip_vs_fo,ip_vs_nq,ip_vs_lblc,ip_vs_pe_sip,ip_vs_wrr,ip_vs_lc,ip_vs_mh,ip_vs_sed,ip_vs_ftp

nf_nat 40960 3 iptable_nat,xt_MASQUERADE,ip_vs_ftp

nf_conntrack 147456 6 xt_conntrack,nf_nat,nf_conntrack_sip,nf_conntrack_netlink,xt_MASQUERADE,ip_vs

nf_defrag_ipv6 24576 2 nf_conntrack,ip_vs

libcrc32c 16384 4 nf_conntrack,nf_nat,xfs,ip_vs
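
Reading through the lsmod output by eye is error-prone, so here is a minimal sketch of an automated check. The function name missing_modules is hypothetical, and the required list simply mirrors the module array in ipvs.modules; adjust it if your script differs.

```shell
# missing_modules: read lsmod-style output on stdin and print every
# required module that is not loaded. The list below is an assumption
# copied from the module array in ipvs.modules.
missing_modules() (
    required="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh ip_vs_lc br_netfilter nf_conntrack"
    # The first column of lsmod output is the module name; drop the header row.
    loaded=$(awk '$1 != "Module" {print $1}')
    for m in $required; do
        # -x matches the whole line, so ip_vs does not match ip_vs_rr.
        printf '%s\n' "$loaded" | grep -qx "$m" || echo "$m"
    done
)
```

On a real node this would be used as `lsmod | missing_modules`; empty output means every listed module is loaded.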

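Adjust the sysctl values written to /etc/sysctl.conf at the start of this section to suit your environment, then run sysctl -p to load them.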
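
Appending to /etc/sysctl.conf with cat >> adds duplicate lines every time the step is repeated. The sketch below shows one way to set a key idempotently instead. The helper name set_sysctl and the file name /etc/sysctl.d/k8s.conf in the usage note are assumptions, not part of the original setup.

```shell
# set_sysctl FILE KEY VALUE: make sure FILE contains exactly one
# "KEY=VALUE" line, replacing any earlier definition of KEY.
set_sysctl() (
    file=$1 key=$2 value=$3
    touch "$file"
    # Escape the dots in the key so they match literally in the regex.
    pattern=$(printf '%s' "$key" | sed 's/[.]/\\./g')
    # Copy every line except previous definitions of the key.
    grep -v "^[[:space:]]*${pattern}[[:space:]]*=" "$file" > "$file.tmp" || true
    printf '%s=%s\n' "$key" "$value" >> "$file.tmp"
    mv "$file.tmp" "$file"
)
```

For example, `set_sysctl /etc/sysctl.d/k8s.conf net.ipv4.ip_forward 1` followed by `sysctl --system` would load the setting along with the other files under /etc/sysctl.d.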

Add every node's hostname to /etc/hosts on all nodes.
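
A minimal sketch of adding the entries without creating duplicates. The helper name add_host is hypothetical. The master address 192.168.1.120 and the host names come from the commands later in these notes; the worker addresses in the usage note are placeholders you must replace.

```shell
# add_host FILE IP NAME: append "IP NAME" to FILE unless some line
# already lists NAME as a host name (any field after the address).
add_host() (
    file=$1 ip=$2 name=$3
    touch "$file"
    if ! awk -v n="$name" '{for (i = 2; i <= NF; i++) if ($i == n) found = 1} END {exit !found}' "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
)
```

Usage on each node might look like `add_host /etc/hosts 192.168.1.120 kubernetesmaster`, then `add_host /etc/hosts 192.168.1.121 kubernetesnode1` and so on, where 192.168.1.121 is only an example address.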

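Run all of the following on every node:

- Set up the Docker yum repository

- Set up the Kubernetes yum repository

- Set up the EPEL yum repository

Configure the Docker yum repository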

cd /etc/yum.repos.d/

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Configure the Kubernetes yum repository

cd /etc/yum.repos.d/

cat << EOF > kubernetes.repo

[kubernetes]

name=kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

gpgcheck=0

enabled=1

EOF

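Configure the EPEL yum repository (the heredoc delimiter is quoted so that the shell does not expand $basearch to an empty string)

cat > /etc/yum.repos.d/epel.repo << 'EOF'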

[epel]

name=Extra Packages for Enterprise Linux 7 - $basearch

baseurl=https://mirrors.tuna.tsinghua.edu.cn/epel/7/$basearch

#mirrorlist=https://mirrors.fedoraproject.org/metalink?repo=epel-7&arch=$basearch

failovermethod=priority

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7

[epel-debuginfo]

name=Extra Packages for Enterprise Linux 7 - $basearch - Debug

baseurl=https://mirrors.tuna.tsinghua.edu.cn/epel/7/$basearch/debug

#mirrorlist=https://mirrors.fedoraproject.org/metalink?repo=epel-debug-7&arch=$basearch

failovermethod=priority

enabled=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7

gpgcheck=1

[epel-source]

name=Extra Packages for Enterprise Linux 7 - $basearch - Source

baseurl=https://mirrors.tuna.tsinghua.edu.cn/epel/7/SRPMS

#mirrorlist=https://mirrors.fedoraproject.org/metalink?repo=epel-source-7&arch=$basearch

failovermethod=priority

enabled=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7

gpgcheck=1

EOF

Run all of the following on every node:

- Install Docker

- Deploy with kubeadm

yum -y install kubeadm-1.15.0-0.x86_64 kubectl-1.15.0-0.x86_64 kubelet-1.15.0-0.x86_64 kubernetes-cni-0.7.5-0.x86_64

If swap is still enabled, tell the kubelet not to fail because of it:

vim /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--fail-swap-on=false"
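
If you would rather disable swap than bypass the check, the sketch below is one way to keep it off across reboots. This is an alternative to the setting above, not part of the original procedure, and the helper name comment_out_swap is hypothetical.

```shell
# comment_out_swap FSTAB: prefix every active swap entry in FSTAB with "#".
# Lines that are already comments are left untouched, so the function
# can be run repeatedly.
comment_out_swap() (
    fstab=$1
    # An active swap entry is a non-comment line whose third field
    # (the filesystem type) is "swap".
    awk '!/^[[:space:]]*#/ && $3 == "swap" {print "#" $0; next} {print}' "$fstab" > "$fstab.tmp" &&
        mv "$fstab.tmp" "$fstab"
)
```

On a node this would be combined with turning swap off immediately, for example `swapoff -a && comment_out_swap /etc/fstab`. Back up /etc/fstab first.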

Enable the kubelet at boot

systemctl enable kubelet

Errors encountered while installing Kubernetes:

If you see the following errors:

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory

Fix:

Install the bridge-utils package, then load the bridge and br_netfilter modules.

yum install -y bridge-utils.x86_64

modprobe bridge

modprobe br_netfilter

[ERROR FileContent--proc-sys-net-ipv4-ip_forward]: /proc/sys/net/ipv4/ip_forward contents are not set to 1

Fix by running:

sysctl -w net.ipv4.ip_forward=1

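If the file /proc/sys/net/bridge/bridge-nf-call-iptables does not exist, load br_netfilter at boot (the heredoc delimiter is quoted so that $file is written literally instead of being expanded):

cat > /etc/rc.sysinit << 'EOF'

#!/bin/bash

for file in /etc/sysconfig/modules/*.modules ; do

[ -x "$file" ] && "$file"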

done

EOF

cat > /etc/sysconfig/modules/br_netfilter.modules << EOF

modprobe br_netfilter

EOF

chmod 755 /etc/sysconfig/modules/br_netfilter.modules

lsmod |grep br_netfilter

After rebooting:

echo "1" >/proc/sys/net/bridge/bridge-nf-call-iptables

Install Kubernetes

kubeadm init --kubernetes-version=v1.15.2 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-apiserver:v1.15.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers), error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-controller-manager:v1.15.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers), error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-scheduler:v1.15.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers), error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-proxy:v1.15.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers), error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/pause:3.1: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers), error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/etcd:3.3.10: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers), error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/coredns:1.3.1: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers), error: exit status 1

The usual workaround is to pull the images from a Docker Hub mirror instead:

docker pull mirrorgooglecontainers/kube-apiserver:v1.15.2

docker pull mirrorgooglecontainers/kube-controller-manager:v1.15.2

docker pull mirrorgooglecontainers/kube-scheduler:v1.15.2

docker pull mirrorgooglecontainers/kube-proxy:v1.15.2

docker pull mirrorgooglecontainers/pause:3.1

docker pull mirrorgooglecontainers/etcd:3.3.10

docker pull mirrorgooglecontainers/coredns:1.3.1

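v1.15.2 was probably too new for the mirror at the time, so the workaround failed. I fell back to the slightly older v1.15.0 and will retry v1.15.2 in a few days: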

kubeadm init --kubernetes-version=v1.15.0 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-apiserver:v1.15.0: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers), error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-controller-manager:v1.15.0: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers), error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-scheduler:v1.15.0: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers), error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-proxy:v1.15.0: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers), error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/pause:3.1: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers), error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/etcd:3.3.10: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers), error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/coredns:1.3.1: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers), error: exit status 1

docker pull mirrorgooglecontainers/kube-apiserver:v1.15.0

docker pull mirrorgooglecontainers/kube-controller-manager:v1.15.0

docker pull mirrorgooglecontainers/kube-scheduler:v1.15.0

docker pull mirrorgooglecontainers/kube-proxy:v1.15.0

docker pull mirrorgooglecontainers/pause:3.1

docker pull mirrorgooglecontainers/etcd:3.3.10

docker pull mirrorgooglecontainers/coredns:1.3.1

All images for this version pulled successfully. Retag them with the k8s.gcr.io names that kubeadm expects:

[root@kubernetesmaster docker]# docker tag eb516548c180 k8s.gcr.io/coredns:1.3.1

[root@kubernetesmaster docker]# docker tag 2c4adeb21b4f k8s.gcr.io/etcd:3.3.10

[root@kubernetesmaster docker]# docker tag da86e6ba6ca1 k8s.gcr.io/pause:3.1

[root@kubernetesmaster docker]# docker tag 201c7a840312 k8s.gcr.io/kube-apiserver:v1.15.0

[root@kubernetesmaster docker]# docker tag 8328bb49b652 k8s.gcr.io/kube-controller-manager:v1.15.0

[root@kubernetesmaster docker]# docker tag 2d3813851e87 k8s.gcr.io/kube-scheduler:v1.15.0

[root@kubernetesmaster docker]# docker tag d235b23c3570 k8s.gcr.io/kube-proxy:v1.15.0
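
The image IDs above are specific to one machine, so the same pull-and-retag steps can be sketched by image name instead. The function name mirror_commands is hypothetical; the mirror namespace and the image versions are the ones used in the commands above. The function only prints the commands so they can be reviewed before anything runs.

```shell
# mirror_commands VERSION: print the docker pull and docker tag commands
# needed to fetch each control-plane image from the mirror and give it
# the k8s.gcr.io name that kubeadm looks for.
mirror_commands() (
    version=$1
    mirror=mirrorgooglecontainers   # mirror namespace used in the commands above
    for image in "kube-apiserver:$version" "kube-controller-manager:$version" \
                 "kube-scheduler:$version" "kube-proxy:$version" \
                 pause:3.1 etcd:3.3.10 coredns:1.3.1; do
        echo "docker pull $mirror/$image"
        echo "docker tag $mirror/$image k8s.gcr.io/$image"
    done
)
```

After checking the output of `mirror_commands v1.15.0`, it could be executed with `mirror_commands v1.15.0 | sh`. The exact image list for a given release can be confirmed with `kubeadm config images list --kubernetes-version v1.15.0`.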

kubeadm init --kubernetes-version=v1.15.0 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12

To ignore preflight errors:

kubeadm init --kubernetes-version=v1.15.0 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --ignore-preflight-errors=all

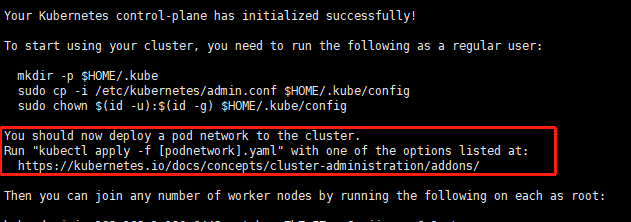

On success, the console prints output like this:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.120:6443 --token 9xnm1x.rh79xwavbdi7at4a \
    --discovery-token-ca-cert-hash sha256:1efc0b8c266a7494105a93684a571ff6d2bec596c1fcde9347cd569f21cf3f7b

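After initialization completes, note down the token and CA certificate hash that nodes need in order to join:

--token 9xnm1x.rh79xwavbdi7at4a \
    --discovery-token-ca-cert-hash sha256:1efc0b8c266a7494105a93684a571ff6d2bec596c1fcde9347cd569f21cf3f7b

P.S. This is a good point to take a snapshot of the machine so you can roll back to it later.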

Deploy the flannel network plugin on the master

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl command auto-completion

yum install -y bash-completion

Load completion in the current shell and persist it in ~/.bashrc

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

Join each node using the token

kubeadm join 192.168.1.120:6443 --token 9xnm1x.rh79xwavbdi7at4a \
    --discovery-token-ca-cert-hash sha256:1efc0b8c266a7494105a93684a571ff6d2bec596c1fcde9347cd569f21cf3f7b

By default a token is valid for 24 hours. Once it expires it can no longer be used, and nodes that join later need a new token.

Generate a new token on the master

[root@kubernetesmaster ~]# kubeadm token create

905hgq.1akgmga715dzooxo

[root@kubernetesmaster ~]# kubeadm token list

TOKEN                     TTL   EXPIRES                     USAGES                   DESCRIPTION   EXTRA GROUPS
905hgq.1akgmga715dzooxo   23h   2019-06-23T15:18:24+08:00   authentication,signing   <none>        system:bootstrappers:kubeadm:default-node-token

Get the SHA-256 hash of the CA certificate's public key

[root@kubernetesmaster ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

2db0df25f40a3376e35dc847d575a2a7def59604b8196f031663efccbc8290c2

Join the cluster with the new token

kubeadm join 192.168.1.120:6443 --token 905hgq.1akgmga715dzooxo \
    --discovery-token-ca-cert-hash sha256:2db0df25f40a3376e35dc847d575a2a7def59604b8196f031663efccbc8290c2
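
Copying the token and hash by hand into a two-line command is where the line break tends to get lost. A small sketch that assembles the join command on a single line from its three parts; the helper name build_join_command is hypothetical.

```shell
# build_join_command ENDPOINT TOKEN CA_HASH: print a one-line kubeadm join
# command. CA_HASH is the bare hex digest; the sha256: prefix is added here.
build_join_command() (
    endpoint=$1 token=$2 ca_hash=$3
    printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' \
        "$endpoint" "$token" "$ca_hash"
)
```

For example, `build_join_command 192.168.1.120:6443 905hgq.1akgmga715dzooxo 2db0df25...` prints the command to run on each node. kubeadm can also generate the whole command itself with `kubeadm token create --print-join-command`, which avoids computing the hash separately.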

Check whether every node is Ready

[root@kubernetesmaster ~]# kubectl get node

NAME               STATUS   ROLES    AGE    VERSION
kubernetesmaster   Ready    master   3m1s   v1.15.0
kubernetesnode1    Ready    <none>   72s    v1.15.0
kubernetesnode2    Ready    <none>   54s    v1.15.0
kubernetesnode3    Ready
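
With more than a handful of nodes it helps to filter this table rather than read it. A minimal sketch, assuming the default column layout shown above; the function name not_ready_nodes is hypothetical.

```shell
# not_ready_nodes: read "kubectl get node" output on stdin and print the
# name of every node whose STATUS column is not exactly "Ready".
# Note: a cordoned node ("Ready,SchedulingDisabled") is also reported.
not_ready_nodes() {
    # NR > 1 skips the header row; $1 is NAME and $2 is STATUS.
    awk 'NR > 1 && $2 != "Ready" {print $1}'
}
```

Usage would be `kubectl get node | not_ready_nodes`; empty output means every node reports Ready.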

If a node is not Ready, follow the system log:

journalctl -f

or show only the kubelet's log:

journalctl -f -u kubelet

If the log shows:

KubeletNotReady runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized

Fix: create the CNI configuration by hand.

mkdir -p /etc/cni/net.d/

vim /etc/cni/net.d/10-flannel.conf

{

"name": "cbr0",

"type": "flannel",

"delegate": {

"isDefaultGateway": true

}

}
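
The same file can be written without an interactive editor, which is convenient when the fix has to be repeated on several nodes. A sketch under that assumption; the function name write_flannel_cni_conf is hypothetical and the JSON is the content shown above.

```shell
# write_flannel_cni_conf DIR: create DIR if needed and write
# 10-flannel.conf into it with the configuration shown above.
write_flannel_cni_conf() (
    dir=$1
    mkdir -p "$dir"
    # The quoted delimiter keeps the JSON literal (no shell expansion).
    cat > "$dir/10-flannel.conf" << 'EOF'
{
  "name": "cbr0",
  "type": "flannel",
  "delegate": {
    "isDefaultGateway": true
  }
}
EOF
)
```

On a node this would be called as `write_flannel_cni_conf /etc/cni/net.d`.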

2019-09-21

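I rebuilt the cluster today and finally found the cause of the earlier network problem.

After the master finished installing, the command line printed this message

Deploy the flannel network plugin on the master immediately afterwards. This can be done with kubectl: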

kubectl apply -f kube-flannel.yml

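Note: kube-flannel.yml is available at https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml, and the installation package provided at the end of the article also contains it.

You can now check the master's status with:

kubectl get nodes

If the master's status is Ready, the master node was deployed successfully.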

Install the dashboard:

Install

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

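Create an access account

Use the Kubernetes service account mechanism to create a new user, grant it admin privileges, and log in to the dashboard web UI with the bearer token bound to that user. The main steps are:

- Create the service account and cluster role binding

- Get the user's login token

- Create the .p12 file to import into the browser

Create the service account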

cat > dashboard_service_account_admin.yaml << EOF

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system

EOF

kubectl apply -f dashboard_service_account_admin.yaml

Create the cluster role binding

cat > dashboard_cluster_role_binding_admin.yaml << EOF

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system

EOF

kubectl apply -f dashboard_cluster_role_binding_admin.yaml

Get the user's login token

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}') > admin-token.yaml && cat admin-token.yaml

The output looks like this (you only need to record the token value):

Name: admin-user-token-gcdtv

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: 64f6798d-a550-4366-a53c-bef75476ad95

Type: kubernetes.io/service-account-token

Data

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLWdjZHR2Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI2NGY2Nzk4ZC1hNTUwLTQzNjYtYTUzYy1iZWY3NTQ3NmFkOTUiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.eyQtdyTMQa3o-tgc35QRJLoPjGbzilJW78HAUN4hoqdIFa4Pdpus9odxVW0fAsqvyLkhhLllI0z-P4WAw8tPTxxzLG1GEnh3k6JhAPnT073CsEdweDEpNjkzeCjWNoJgT1wRyXdK_CNLUalQ0R5nS1z6wYOM_IvRReja0fy8YLCVa4WJe7-u7iyVAzonsXbdo9DlUpr7MWLTMec0yoaTRAirFJ16KT0BIv_EuT7O0NdNiTA1pSw5bGa3-OG4lTE7aK7FoM1DblE8x04SGQMcIg-RY8rLdLeclKT_CrwM9JbuovciI_ONL8oaV8Vpmdi-cU5J5VOPGanZ3hpcfiKhDA

ca.crt: 1025 bytes

namespace: 11 bytes
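
The token is one very long line, so copying it from the terminal by hand is easy to get wrong. A minimal sketch that pulls just the token value out of the describe output saved above; the function name extract_token is hypothetical.

```shell
# extract_token: read "kubectl describe secret" output on stdin and print
# only the value that follows the "token:" label.
extract_token() {
    awk '$1 == "token:" {print $2}'
}
```

With the file written by the command above, `extract_token < admin-token.yaml` prints the token on its own line, ready to paste into the dashboard login page.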

Create the .p12 certificate file to import into the browser

grep 'client-certificate-data' ~/.kube/config | head -n 1 | awk '{print $2}' | base64 -d > kubecfg.crt

grep 'client-key-data' ~/.kube/config | head -n 1 | awk '{print $2}' | base64 -d > kubecfg.key

openssl pkcs12 -export -clcerts -inkey kubecfg.key -in kubecfg.crt -out kubecfg.p12 -name "kubernetes-web-client"

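The output looks like this (remember the export password you enter; you will need it when importing the certificate):

Enter Export Password: // enter the certificate password

Verifying - Enter Export Password: // enter the certificate password again

Access the kubernetes-dashboard UI

Import the certificate

Before opening the kubernetes-dashboard UI, download the kubecfg.p12 file you just generated and import it into your browser.

Open the UI

Go to https://<MASTER_IP>:6443/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/

Choose Token and enter the token you recorded earlier.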