First, create the Zipkin Deployment and Service.

zipkin-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: zipkin-svc
  namespace: dev
  labels:
    name: zipkin-svc
spec:
  selector:
    name: zipkin
  type: NodePort
  ports:
    - name: zipkin
      port: 9411
      targetPort: 9411
      nodePort: 30181
      protocol: TCP

zipkin.yaml

# apps/v1 replaces extensions/v1beta1, which is no longer served as of Kubernetes 1.16
apiVersion: apps/v1
kind: Deployment
metadata:
  name: zipkin
  namespace: dev
  labels:
    name: zipkin
spec:
  replicas: 1
  # apps/v1 requires an explicit selector matching the pod template labels
  selector:
    matchLabels:
      name: zipkin
  template:
    metadata:
      labels:
        name: zipkin
    spec:
      terminationGracePeriodSeconds: 300
      containers:
        - name: zipkin
          image: openzipkin/zipkin
          imagePullPolicy: Always
          env:
            - name: STORAGE_TYPE
              value: "mysql"
            - name: MYSQL_DB
              value: "xxxx"
            - name: MYSQL_USER
              value: "xxxx"
            - name: MYSQL_PASS
              value: "xxxx"
            - name: MYSQL_HOST
              value: "xxxx"
            - name: MYSQL_TCP_PORT
              value: "xxxx"
          ports:
            - containerPort: 9411
              protocol: TCP
          volumeMounts:
            - mountPath: /etc/localtime
              name: localtime
              readOnly: true
            - mountPath: /etc/resolv.conf
              name: resolv
              readOnly: true
          resources:
            limits:
              memory: "8Gi"
              cpu: "4"
            requests:
              memory: "2Gi"
              cpu: "1"
      volumes:
        - hostPath:
            path: /etc/localtime
          name: localtime
        - hostPath:
            path: /etc/resolv.conf
          name: resolv

Next, create the tables in MySQL.

CREATE TABLE IF NOT EXISTS zipkin_spans (
  `trace_id_high` BIGINT NOT NULL DEFAULT 0 COMMENT 'If non zero, this means the trace uses 128 bit traceIds instead of 64 bit',
  `trace_id` BIGINT NOT NULL,
  `id` BIGINT NOT NULL,
  `name` VARCHAR(255) NOT NULL,
  `remote_service_name` VARCHAR(255),
  `parent_id` BIGINT,
  `debug` BIT(1),
  `start_ts` BIGINT COMMENT 'Span.timestamp(): epoch micros used for endTs query and to implement TTL',
  `duration` BIGINT COMMENT 'Span.duration(): micros used for minDuration and maxDuration query',
  PRIMARY KEY (`trace_id_high`, `trace_id`, `id`)
) ENGINE=InnoDB ROW_FORMAT=COMPRESSED CHARACTER SET=utf8 COLLATE utf8_general_ci;

ALTER TABLE zipkin_spans ADD INDEX(`trace_id_high`, `trace_id`) COMMENT 'for getTracesByIds';
ALTER TABLE zipkin_spans ADD INDEX(`name`) COMMENT 'for getTraces and getSpanNames';
ALTER TABLE zipkin_spans ADD INDEX(`remote_service_name`) COMMENT 'for getTraces and getRemoteServiceNames';
ALTER TABLE zipkin_spans ADD INDEX(`start_ts`) COMMENT 'for getTraces ordering and range';

CREATE TABLE IF NOT EXISTS zipkin_annotations (
  `trace_id_high` BIGINT NOT NULL DEFAULT 0 COMMENT 'If non zero, this means the trace uses 128 bit traceIds instead of 64 bit',
  `trace_id` BIGINT NOT NULL COMMENT 'coincides with zipkin_spans.trace_id',
  `span_id` BIGINT NOT NULL COMMENT 'coincides with zipkin_spans.id',
  `a_key` VARCHAR(255) NOT NULL COMMENT 'BinaryAnnotation.key or Annotation.value if type == -1',
  `a_value` BLOB COMMENT 'BinaryAnnotation.value(), which must be smaller than 64KB',
  `a_type` INT NOT NULL COMMENT 'BinaryAnnotation.type() or -1 if Annotation',
  `a_timestamp` BIGINT COMMENT 'Used to implement TTL; Annotation.timestamp or zipkin_spans.timestamp',
  `endpoint_ipv4` INT COMMENT 'Null when Binary/Annotation.endpoint is null',
  `endpoint_ipv6` BINARY(16) COMMENT 'Null when Binary/Annotation.endpoint is null, or no IPv6 address',
  `endpoint_port` SMALLINT COMMENT 'Null when Binary/Annotation.endpoint is null',
  `endpoint_service_name` VARCHAR(255) COMMENT 'Null when Binary/Annotation.endpoint is null'
) ENGINE=InnoDB ROW_FORMAT=COMPRESSED CHARACTER SET=utf8 COLLATE utf8_general_ci;

ALTER TABLE zipkin_annotations ADD UNIQUE KEY(`trace_id_high`, `trace_id`, `span_id`, `a_key`, `a_timestamp`) COMMENT 'Ignore insert on duplicate';
ALTER TABLE zipkin_annotations ADD INDEX(`trace_id_high`, `trace_id`, `span_id`) COMMENT 'for joining with zipkin_spans';
ALTER TABLE zipkin_annotations ADD INDEX(`trace_id_high`, `trace_id`) COMMENT 'for getTraces/ByIds';
ALTER TABLE zipkin_annotations ADD INDEX(`endpoint_service_name`) COMMENT 'for getTraces and getServiceNames';
ALTER TABLE zipkin_annotations ADD INDEX(`a_type`) COMMENT 'for getTraces and autocomplete values';
ALTER TABLE zipkin_annotations ADD INDEX(`a_key`) COMMENT 'for getTraces and autocomplete values';
ALTER TABLE zipkin_annotations ADD INDEX(`trace_id`, `span_id`, `a_key`) COMMENT 'for dependencies job';

CREATE TABLE IF NOT EXISTS zipkin_dependencies (
  `day` DATE NOT NULL,
  `parent` VARCHAR(255) NOT NULL,
  `child` VARCHAR(255) NOT NULL,
  `call_count` BIGINT,
  `error_count` BIGINT,
  PRIMARY KEY (`day`, `parent`, `child`)
) ENGINE=InnoDB ROW_FORMAT=COMPRESSED CHARACTER SET=utf8 COLLATE utf8_general_ci;

The SQL file that creates these tables is maintained at:

https://github.com/openzipkin/zipkin/blob/master/zipkin-storage/mysql-v1/src/main/resources/mysql.sql

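I still recommend taking material like this from the official documentation; most of what turns up in a web search is out of date.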
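The `trace_id_high` and `trace_id` columns in the schema above together hold one trace ID: per the column comment, a 128-bit ID uses both, while a 64-bit ID leaves `trace_id_high` at 0. The following is a minimal sketch of how a hex trace ID maps onto two signed BIGINT values. The class name `TraceIdColumns` and the sample ID are hypothetical, and this is an illustration rather than Zipkin's own code.

```java
// Illustration only: splits a hex trace ID into the two 64-bit halves
// that correspond to the trace_id_high and trace_id columns.
final class TraceIdColumns {

    /** Upper 64 bits, or 0 when the ID is a 16-character (64-bit) one. */
    static long high(String hex) {
        if (hex.length() == 32) {
            return Long.parseUnsignedLong(hex.substring(0, 16), 16);
        }
        return 0L;
    }

    /** Lower 64 bits; may be negative because BIGINT and long are signed. */
    static long low(String hex) {
        int start = Math.max(0, hex.length() - 16);
        return Long.parseUnsignedLong(hex.substring(start), 16);
    }

    public static void main(String[] args) {
        String id = "463ac35c9f6413ad48485a3953bb6124"; // hypothetical 128-bit ID
        System.out.println("trace_id_high = " + high(id));
        System.out.println("trace_id      = " + low(id));
    }
}
```

This is why a trace ID copied from the Zipkin UI cannot be pasted into a `WHERE trace_id = ...` clause as-is; it has to be converted from hex first.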

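You can also skip MySQL and keep everything in memory (STORAGE_TYPE set to "mem", which is Zipkin's default). The upside is speed; the downsides are heavy memory use and losing all trace data on restart.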

Add the dependency to the Spring Boot project:

<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-starter-zipkin</artifactId>
</dependency>

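application.yml:

spring:
  application:
    name: ${MY_POD_NAME}
  zipkin:
    # Address of the Zipkin server
    base-url: http://${ZIPKIN_DOMAIN_URI}/
  sleuth:
    sampler:
      # Sampling rate; 1.0 means 100% of requests are traced.
      # Spring Cloud Sleuth 2.x renamed this property to "probability".
      percentage: 1.0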
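To make the sampling rate concrete, here is a minimal sketch of a rate-based sampler. It is not Sleuth's implementation; `RateSampler` is a hypothetical class that decides deterministically from the trace ID, so that a rate of 1.0 keeps every trace and 0.1 keeps roughly one in ten.

```java
// Illustration only: a deterministic sampler driven by a rate in [0, 1].
final class RateSampler {
    private static final long BUCKETS = 10_000L;
    private final long boundary; // number of buckets that are sampled

    RateSampler(double rate) {
        if (rate < 0.0 || rate > 1.0) {
            throw new IllegalArgumentException("rate must be in [0, 1]");
        }
        this.boundary = Math.round(rate * BUCKETS);
    }

    /** Returns true when the given trace should be reported. */
    boolean isSampled(long traceId) {
        // floorMod keeps the bucket non-negative for negative IDs
        return Math.floorMod(traceId, BUCKETS) < boundary;
    }

    public static void main(String[] args) {
        RateSampler sampler = new RateSampler(0.1);
        int kept = 0;
        for (long id = 0; id < BUCKETS; id++) {
            if (sampler.isSampled(id)) {
                kept++;
            }
        }
        System.out.println("kept " + kept + " of " + BUCKETS + " traces");
    }
}
```

A rate of 1.0 is convenient while setting things up; on a busy service a lower rate reduces the load on both the application and the Zipkin storage.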

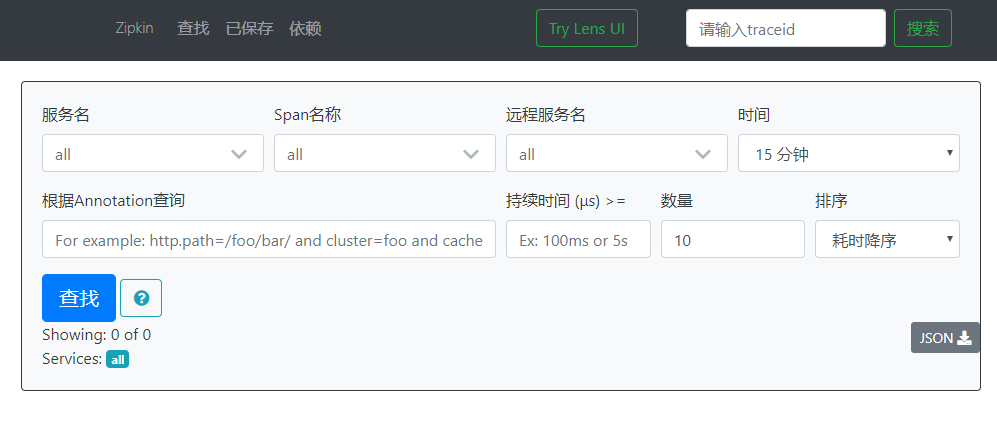

Once the application is running, open the Zipkin address in a browser to see the traced calls.
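Besides the web UI, traces can also be fetched over Zipkin's HTTP API (`/api/v2/traces`). The sketch below assumes Java 11 or later and that Zipkin is reachable through the NodePort defined earlier; the class name `ZipkinQuery`, the host `node-1`, and the service name are hypothetical.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Illustration only: builds and sends a query for recent traces of one service.
final class ZipkinQuery {

    /** Builds the /api/v2/traces URI for a service name and result limit. */
    static URI tracesUri(String baseUrl, String serviceName, int limit) {
        String base = baseUrl.endsWith("/")
                ? baseUrl.substring(0, baseUrl.length() - 1)
                : baseUrl;
        String service = URLEncoder.encode(serviceName, StandardCharsets.UTF_8);
        return URI.create(base + "/api/v2/traces?serviceName=" + service + "&limit=" + limit);
    }

    public static void main(String[] args) throws Exception {
        if (args.length < 2) {
            System.out.println("usage: ZipkinQuery <baseUrl> <serviceName>");
            return;
        }
        URI uri = tracesUri(args[0], args[1], 10);
        HttpRequest request = HttpRequest.newBuilder(uri).GET().build();
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode());
        System.out.println(response.body()); // JSON array of traces
    }
}
```

For example, `java ZipkinQuery.java http://node-1:30181 my-service` would query the instance exposed on NodePort 30181.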

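A tip:

Spring Cloud Sleuth (pulled in by the Zipkin starter) puts the trace ID of the current request into the logging context. Include it in the logback pattern so that it is written to the log file; you can then search the logs by trace ID when troubleshooting.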

Example logback configuration:

<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <include resource="org/springframework/boot/logging/logback/defaults.xml"/>
    <springProperty scope="context" name="springAppName" source="spring.application.name"/>

    <!-- Example for logging into the build folder of your project -->
    <property name="LOG_FILE" value="logs/${springAppName}.log"/>

    <!-- You can override this to have a custom pattern -->
    <property name="CONSOLE_LOG_PATTERN" value="%d [%X{traceId}/%X{spanId}] [%thread] %-5level %logger{36} - %msg%n"/>

    <!-- Appender to log to console -->
    <appender name="console" class="ch.qos.logback.core.ConsoleAppender">
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <!-- Minimum logging level to be presented in the console logs -->
            <level>INFO</level>
        </filter>
        <encoder>
            <pattern>${CONSOLE_LOG_PATTERN}</pattern>
            <charset>utf8</charset>
        </encoder>
    </appender>

    <!-- Appender to log to file -->
    <appender name="flatfile" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${LOG_FILE}</file>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_FILE}.%d{yyyy-MM-dd}.gz</fileNamePattern>
            <maxHistory>7</maxHistory>
        </rollingPolicy>
        <encoder>
            <pattern>${CONSOLE_LOG_PATTERN}</pattern>
            <charset>utf8</charset>
        </encoder>
    </appender>

    <root level="INFO">
        <appender-ref ref="console"/>
        <!-- Also write to the rolling log file -->
        <appender-ref ref="flatfile"/>
    </root>
</configuration>
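With a pattern of the form `%d [%X{traceId}/%X{spanId}] ...`, every log line carries its trace ID in the first bracketed field, so collecting all lines of one request comes down to matching that field. A minimal sketch follows; the class `TraceLogFilter` and the sample log lines are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Illustration only: picks out the log lines that belong to one trace.
final class TraceLogFilter {
    // Matches the "[traceId/spanId]" field; both parts are empty outside a trace.
    private static final Pattern IDS =
            Pattern.compile("\\[([0-9a-fA-F]*)/([0-9a-fA-F]*)\\]");

    /** Returns the trace ID of a log line, or "" if the line has none. */
    static String traceIdOf(String line) {
        Matcher m = IDS.matcher(line);
        return m.find() ? m.group(1) : "";
    }

    /** Keeps only the lines whose trace ID equals the given one. */
    static List<String> linesForTrace(List<String> lines, String traceId) {
        List<String> result = new ArrayList<>();
        for (String line : lines) {
            if (traceId.equals(traceIdOf(line))) {
                result.add(line);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> lines = List.of(
                "2024-01-01 12:00:00,001 [463ac35c9f6413ad/a2fb4a1d1a96d312] [exec-1] INFO  c.e.OrderController - create order",
                "2024-01-01 12:00:00,002 [/] [main] INFO  c.e.Application - heartbeat",
                "2024-01-01 12:00:00,003 [463ac35c9f6413ad/00f067aa0ba902b7] [exec-1] ERROR c.e.StockClient - stock call failed");
        linesForTrace(lines, "463ac35c9f6413ad").forEach(System.out::println);
    }
}
```

In day-to-day use a plain text search for the trace ID in the log file does the same job; the sketch only makes explicit what is being matched.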

A sword's edge comes from honing; the plum blossom's fragrance comes from bitter cold.